Transaction volumes lie. User counts lie. TVL lies. Most on-chain metrics are inflated by bots, incentive programs, and coordinated wash trading.

Developer activity is harder to fake.

You can spin up a million wallets in an afternoon. You can't spin up a million meaningful commits. When I wanted to understand what was actually happening in blockchain ecosystems—not what the dashboards claimed—I started looking at the code.

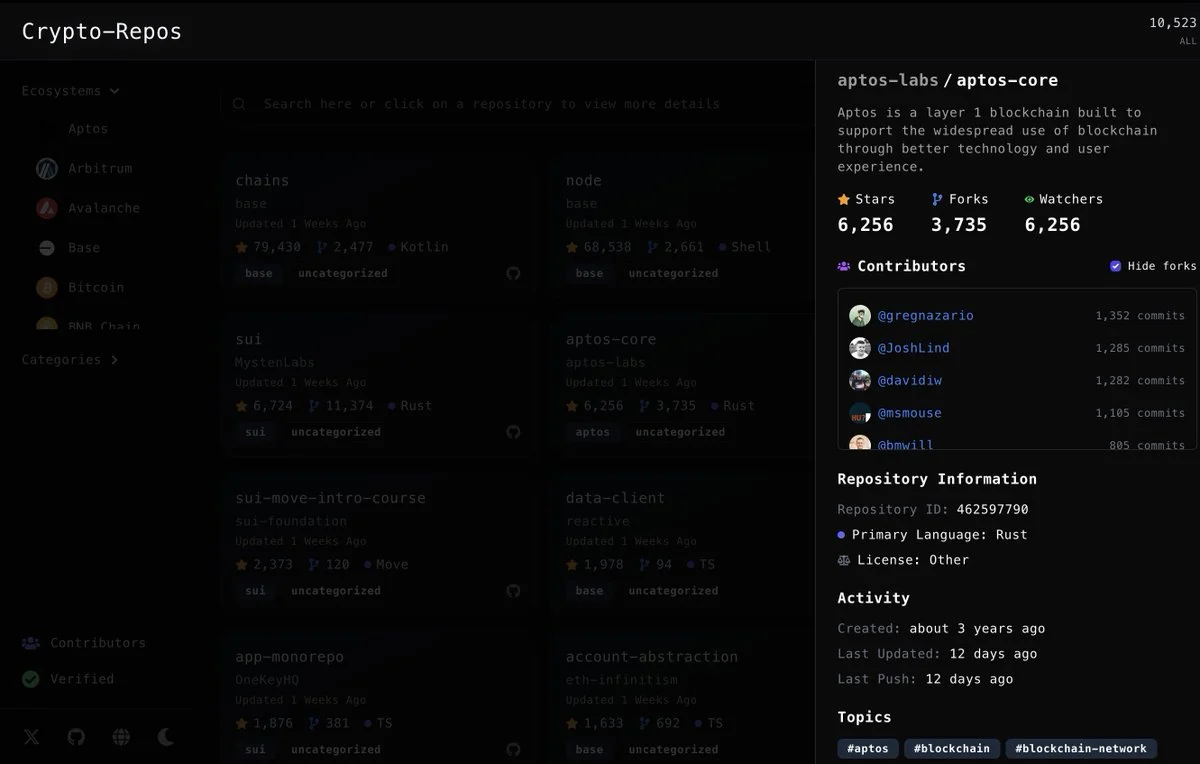

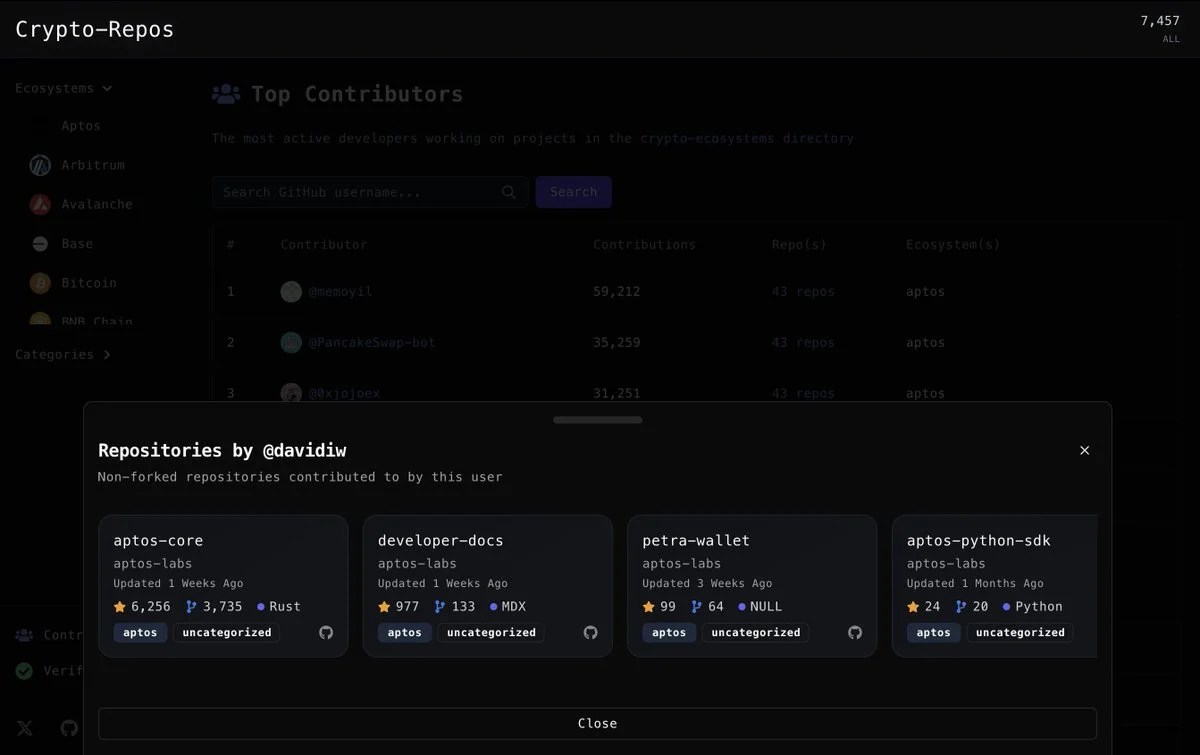

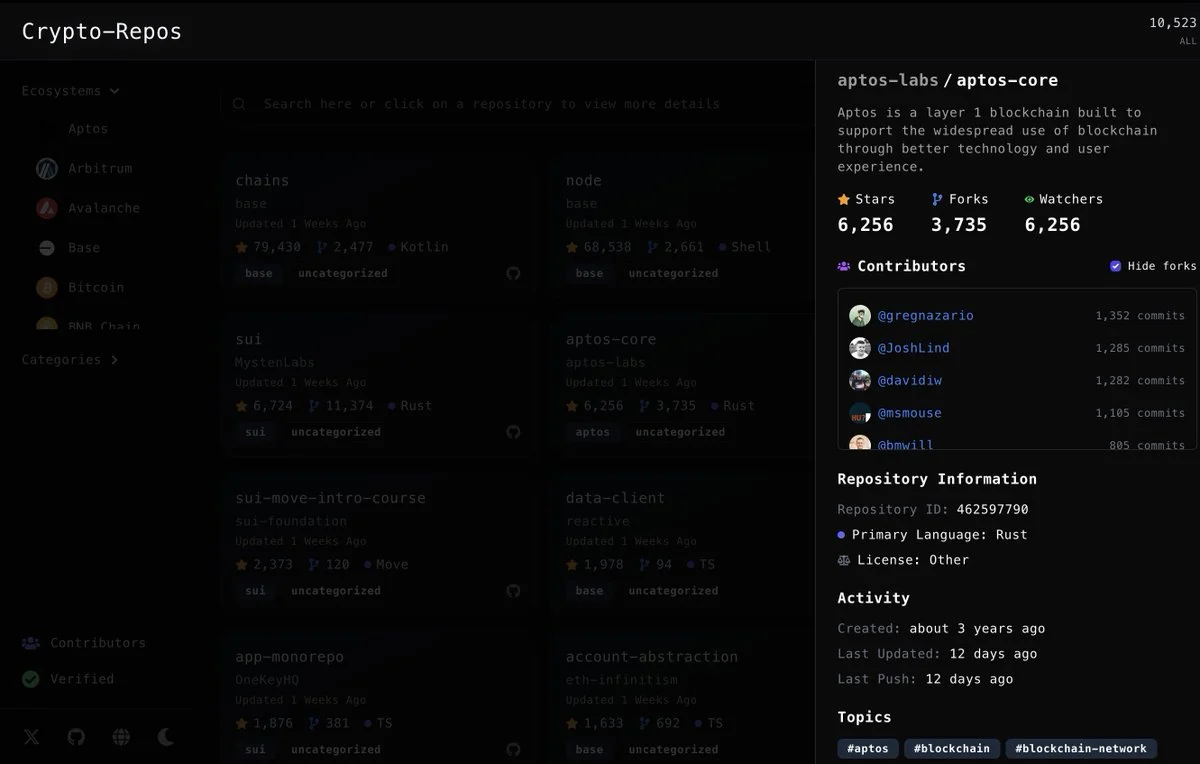

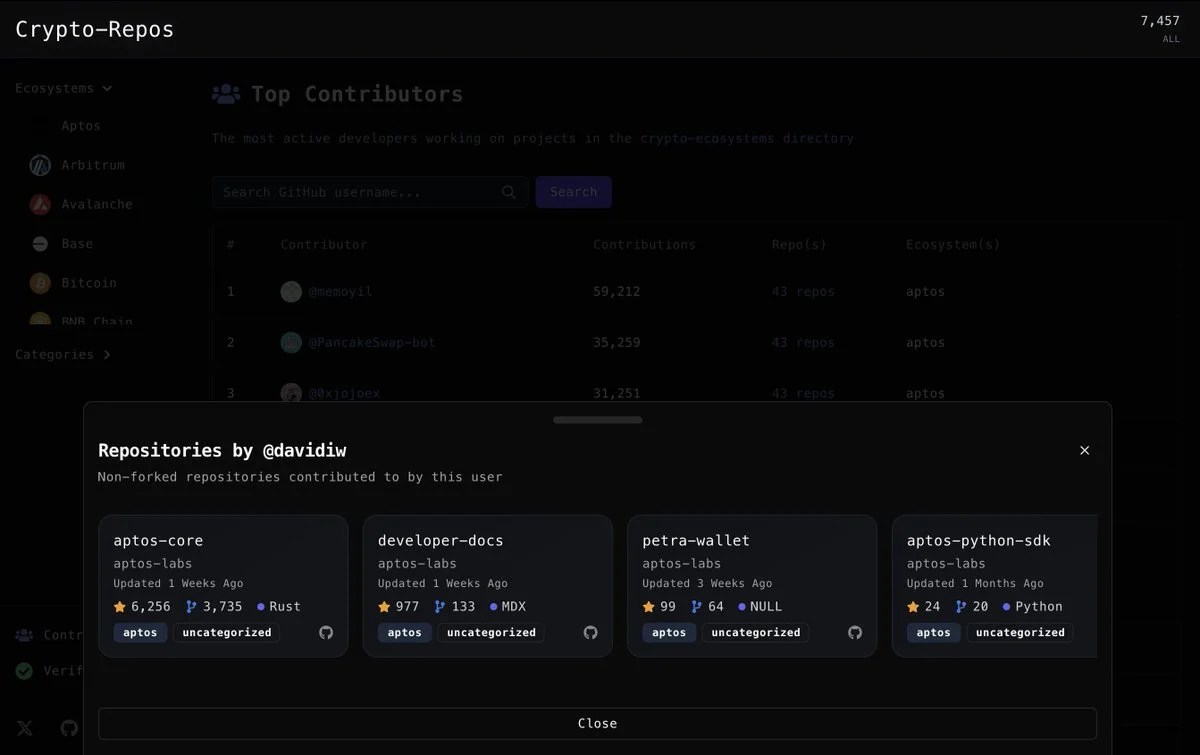

Crypto-Repos indexes over 10,500 repositories and 7,400 contributors across Aptos, Sui, and Base. It's built on Electric Capital's developer activity data, which is the closest thing to ground truth we have for measuring ecosystem health.

The Problem with On-Chain Metrics

Everyone games whatever gets measured. Transaction volumes get inflated by bots chasing airdrops. User counts get Sybil'd by farmers running thousands of wallets. TVL gets pumped through circular deposits where the same capital gets counted multiple times.

Pseudonymous accounts make it nearly impossible to tell real users from fake ones. And the incentives keep getting worse—the more a metric matters for token distributions or investor pitches, the more it gets manipulated.

Developer activity isn't immune to this. You could theoretically pay people to make meaningless commits. But it's expensive, it's slow, and it's hard to fake at the scale that matters. Meaningful code contributions require actual humans doing actual work.

What Crypto-Repos Does

The interface is straightforward: search repositories, filter by ecosystem or language, look at contributor histories. If you're a developer trying to find projects to work on, you can see what's active. If you're hiring, you can evaluate candidates by their actual commit history instead of their LinkedIn.
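As a rough illustration of that kind of search and filtering (not the actual Crypto-Repos code), here is a minimal Python sketch. The `Repo` fields, the `search_repos` helper, and the sample rows are all hypothetical, made up for the example.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Repo:
    # Hypothetical record shape; the real schema may differ.
    name: str
    ecosystem: str
    language: str


def search_repos(
    repos: list[Repo],
    query: str = "",
    ecosystem: Optional[str] = None,
    language: Optional[str] = None,
) -> list[Repo]:
    """Case-insensitive substring match on the name, plus optional exact-match filters."""
    q = query.lower()
    return [
        r
        for r in repos
        if q in r.name.lower()
        and (ecosystem is None or r.ecosystem == ecosystem)
        and (language is None or r.language == language)
    ]


# Made-up sample rows, not real index data.
SAMPLE_REPOS = [
    Repo("example-org/move-wallet", "Aptos", "Move"),
    Repo("example-org/sui-indexer", "Sui", "Rust"),
    Repo("example-org/base-bridge-ui", "Base", "TypeScript"),
    Repo("example-org/aptos-explorer", "Aptos", "TypeScript"),
]

matches = search_repos(SAMPLE_REPOS, ecosystem="Aptos", language="TypeScript")
print([r.name for r in matches])
```

Leaving a filter as `None` means "don't filter on this", which keeps the three controls independent of each other.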

Shout out to @david_wolinsky


Where the Data Comes From

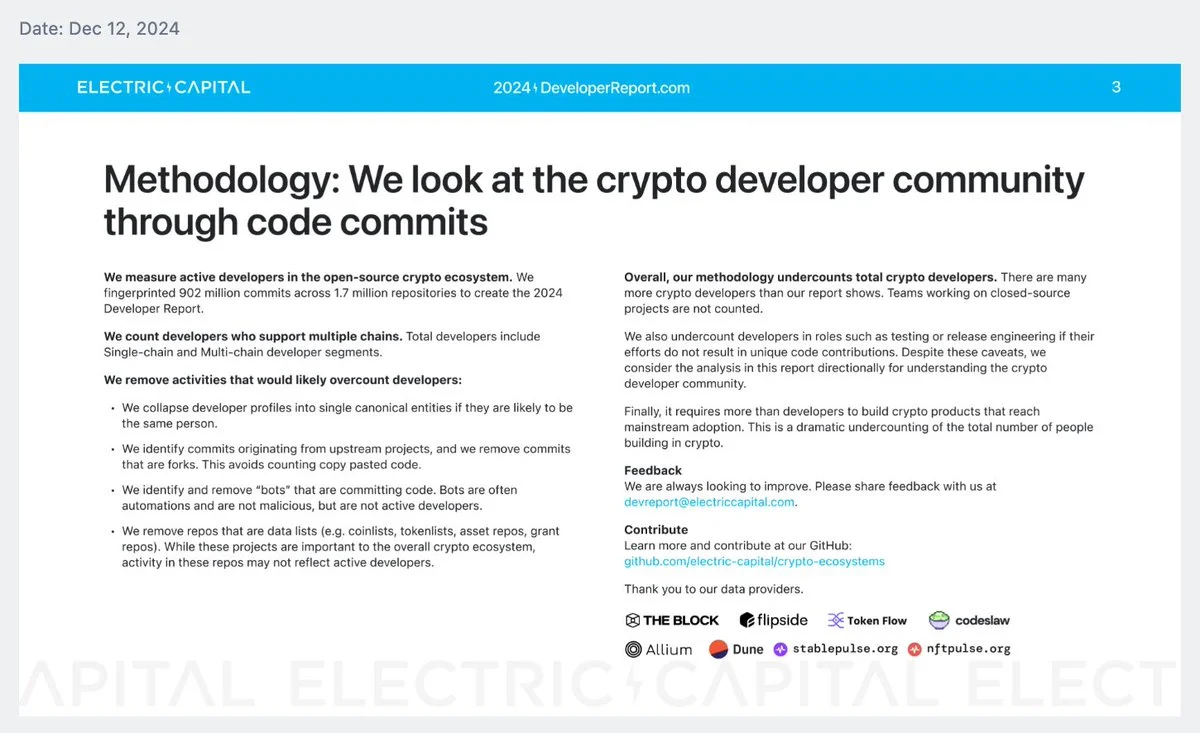

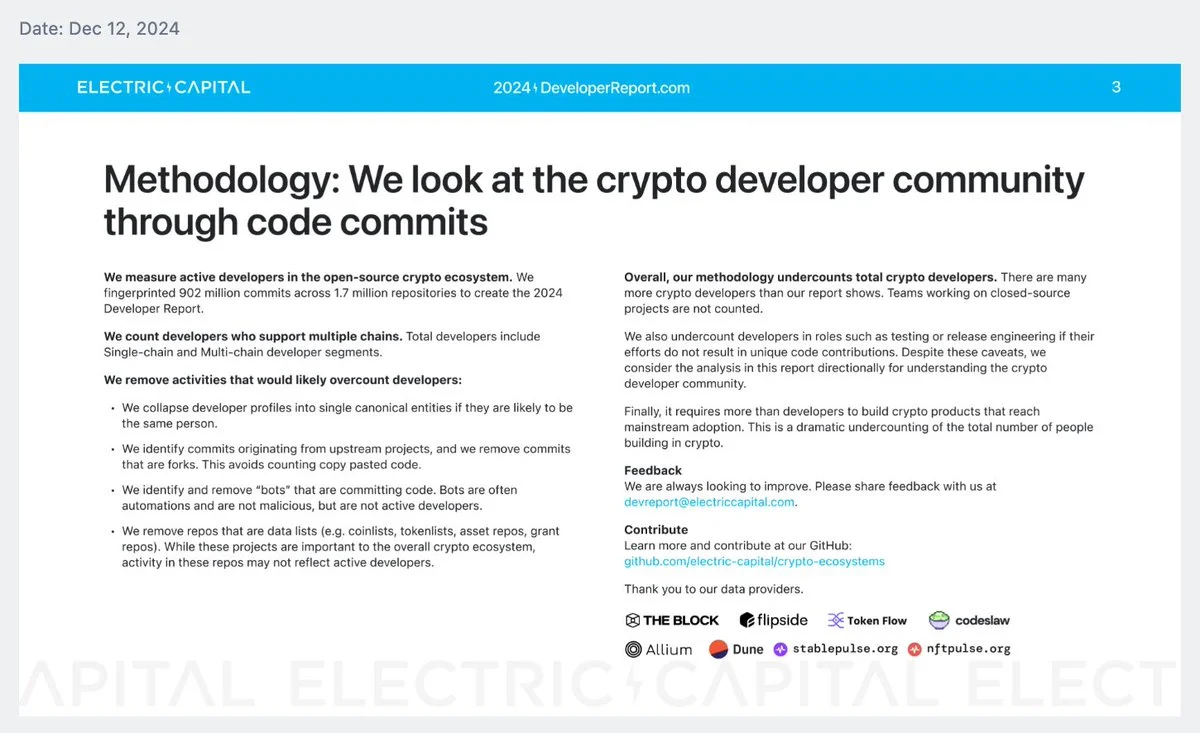

Electric Capital maintains a crypto-ecosystems repository that categorizes open-source blockchain development. Each ecosystem has a .toml file listing known repos. They've defined what counts as a "full-time developer" versus a "monthly active developer" and filter out low-quality contributions.

I'm using their data as the foundation and adding a searchable interface on top. Their annual reports are great for high-level trends, but I wanted something where you could drill into specific projects and people.

The Limits

Commit counts alone don't mean much. A high count might just be someone updating dependencies. A single well-placed contribution might matter more than a thousand minor fixes.

There's also the visibility problem. Not everything is open source. API providers I've talked to estimate the ratio of closed- to open-source code in crypto at about 2:1. Electric Capital only captures the public portion, so if that estimate holds, the visible work is about one part in three and the real developer activity is roughly triple what's visible.
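The arithmetic behind "roughly triple" is simple enough to write down. This sketch assumes the 2:1 estimate applies uniformly, which is a simplification; the function name and the sample figure of 100 are illustrative only.

```python
def estimate_total_activity(visible: float, closed_to_open_ratio: float = 2.0) -> float:
    """Scale the visible (open-source) activity up to an estimated total.

    With a closed:open ratio of r, every 1 unit of open work implies r units
    of closed work, so total = visible * (1 + r).
    """
    if closed_to_open_ratio < 0:
        raise ValueError("ratio must be non-negative")
    return visible * (1 + closed_to_open_ratio)


# 100 units of visible activity at a 2:1 ratio implies 300 units in total.
print(estimate_total_activity(100))
```

The ratio is a parameter because the multiplier depends entirely on that estimate: at 1:1 the total would be double the visible figure rather than triple.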

And some repos are misleading—forks that never get developed, templates that inflate counts, documentation-heavy projects that look active but aren't shipping features. The numbers need interpretation.
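One way to start on that interpretation is to flag the obvious cases before reading the numbers. The sketch below is hypothetical: the `RepoStats` fields, the 0.9 cutoff, and the sample rows are assumptions made for the example, not Electric Capital's filters or anything Crypto-Repos currently does.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical cutoff: share of commits that touch only documentation.
DOCS_HEAVY_THRESHOLD = 0.9


@dataclass(frozen=True)
class RepoStats:
    # Hypothetical fields; a real pipeline would have to derive these.
    name: str
    is_fork: bool
    is_template: bool
    commits_after_fork: int
    docs_only_commit_share: float


def flag_reason(repo: RepoStats) -> Optional[str]:
    """Return why a repo's activity numbers may mislead, or None if nothing stands out."""
    if repo.is_template:
        return "template"
    if repo.is_fork and repo.commits_after_fork == 0:
        return "undeveloped fork"
    if repo.docs_only_commit_share >= DOCS_HEAVY_THRESHOLD:
        return "documentation-heavy"
    return None


# Made-up rows covering each case.
SAMPLE = [
    RepoStats("example-org/core", False, False, 0, 0.10),
    RepoStats("example-org/core-fork", True, False, 0, 0.10),
    RepoStats("example-org/starter-template", False, True, 0, 0.20),
    RepoStats("example-org/handbook", False, False, 0, 0.95),
]

flags = {r.name: flag_reason(r) for r in SAMPLE}
print(flags)
```

A flag is a prompt to look closer, not a verdict: a documentation-heavy repo can still be valuable, it just shouldn't be read as shipping features.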

Current State

Right now it covers Aptos, Sui, and Base. I'm working on expanding to the other ecosystems Electric Capital tracks—there are over 200 of them.

The stack is Next.js and Tailwind on the front end, Neon (Postgres) and Hasura on the back end, deployed on Vercel. Nothing fancy.

What I want to add eventually: semantic search so you can query by concept instead of keyword, a reputation system for contributors, and ecosystem-specific bounty boards. But for now it's just the search interface.

Crypto-Repos

crypto

Measuring blockchains by what's harder to fake

2 min read · April 14, 2025

